New approach to learning medical procedures using a smartphone and the Moodle platform to facilitate assessments and written feedback

Abstract

Background

To overcome communication obstacles between medical students and trainers, we designed serial learning activities utilizing a smartphone and web-based instruction (WBI) on the Moodle platform to provide clear and retrievable trainer feedback to students on an objective structured clinical examination (OSCE) item.

Methods

We evaluated students’ learning achievement and satisfaction with the new learning tool. A total of 80 fourth-year medical students participated. They installed the Moodle app (the WBI platform) on their smartphones and practiced an endotracheal suction procedure on a medical simulation mannequin while a trainer evaluated their clinical skill competence on the smartphone app. Students’ competency was evaluated by comparing scores between the formative assessment and the summative assessment. Students’ satisfaction with the smartphone and WBI system and its perceived usefulness were also analyzed.

Results

The means (standard deviations, SDs) of the formative and summative assessments were 8.80 (2.53) and 14.24 (1.97) out of a total of 17 points, respectively, reflecting a statistically significant difference (p<0.05). The degree of satisfaction and perceived usefulness of the smartphone app and WBI system were excellent, with means (SDs) of 4.60 (0.58) and 4.60 (0.65), respectively.

Conclusion

We believe that the learning process using a smartphone and the Moodle platform offers good guidance for OSCE skill development because trainers’ written feedback is recorded online and is retrievable at all times, enabling students to build and maintain competency through frequent feedback review.

Introduction

The processes of observation and procedural practice for in-patient and out-patient care are an important part of medical education [1,2]. These processes involve corrective feedback typically provided verbally, and the majority of learning is obtained via observation and verbal feedback [3]. In general, students with limited clinical experience do not easily achieve competence by observation and verbal feedback alone, particularly because such feedback is not always clear. To facilitate procedural skill training, trainer feedback needs to be retrievable and available regardless of time and place [4-6].

Lack of timely feedback from teacher to student is a critical communication obstacle to overcome in medical education [7]. Immediate feedback allows students to refine clinical skills as they are being practiced [8,9]. Currently, feedback is typically provided verbally and not always in a timely manner, and proficiency can be lost if the student does not continue to engage with the guidance provided. Furthermore, memory can become distorted over time, which can alter a student’s understanding of verbal feedback. Therefore, timely written feedback is necessary so that students can retrieve it later for self-directed learning [4,10,11]. The medical education field needs a reliable and tangible tool to ensure that clear written feedback is continuously used by students when preparing their clinical skills.

Furthermore, due to the coronavirus disease 2019 (COVID-19) pandemic, in-person education has become limited, and virtual education has become accepted as a new normal form of education [12,13]. Nevertheless, some aspects of medical education, such as clinical skills, are difficult to implement virtually because they require direct feedback. In this context, we needed a teaching tool that would minimize in-person verbal explanation to prevent participants from being infected with the COVID-19 virus via droplets of saliva. We expected that a modular object-oriented dynamic learning environment (Moodle) platform and smartphone app would provide an educational environment in which trainers could give written feedback to students in a timely manner.

Therefore, we designed serial learning activities within the smartphone app and the Moodle platform to guarantee clear and retrievable trainer feedback. We then evaluated students’ learning achievements and their satisfaction with the new learning tool.

Methods

Ethical statements: The study was approved by the Institutional Review Board of the Kosin University Gospel Hospital (KUGH 2020-11-028). The requirement for written informed consent was waived due to the retrospective nature of this study.

A total of 80 fourth-year medical students participated in this study. Students were asked to complete formative assessment, self-reflection, summative assessment, and a learning-tool survey (Fig. 1). All assignments were created using Moodle version 3.0 software (Martin Dougiamas, Perth, Australia; http://www.moodle.org/). Students were instructed to install the Moodle app, a web-based instruction (WBI) platform, on their smartphones (Fig. 2). They were asked to practice an “endotracheal suction” procedure (one of the objective structured clinical examination [OSCE] items used to measure a student’s clinical competence) on a medical simulation mannequin while being evaluated in person by a trainer on the smartphone app. We used the results from the students’ submitted assignments for this study.

WBI website for formative assessment, self-reflection, summative assessment, and feedback. Students accessed the web-based instruction (WBI) website for online endotracheal suction practice (A), where they completed the formative assessment (B), self-reflection (C), summative assessment (D), and survey (E). OSCE, objective structured clinical examination.

1. Formative assessment

Students were asked to learn an endotracheal suction procedure with a provided manual and movie clips on an e-learning consortium website. After that, a formative assessment was performed to evaluate the current status of each student’s skills, in which the trainer in charge of the students’ learning provided feedback based on their performance. Before the procedure, students were required to log in to the Moodle app on their smartphone and open a quiz designed as the formative assessment. The students then handed their smartphones to the trainer, who was responsible for filling out the assessment, and began the endotracheal suction procedure in front of the trainer. Because the quiz was locked with a passcode, the quiz questions were not visible to the students. To begin the assessment, the trainer entered the passcode on the landing page of the quiz and completed the formative assessment based on the student’s demonstrated skills.

The quiz consisted of 17 questions that dealt with critical checkpoints for a proficient endotracheal suction procedure. Each question had rated answers based on the level of competence the student demonstrated. When the trainer selected one of the answers, the corresponding feedback was generated automatically. The trainer could also add further constructive feedback if necessary. After the formative assessment was completed, students could read both the automatically generated feedback and the trainer’s on-the-spot written feedback.
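The rated-answer mechanism described above can be pictured as a lookup from the rating selected by the trainer to a pre-written feedback string, with an optional free-text comment appended. The following Python sketch is illustrative only: the item wording, rating labels, point values, feedback text, and the names `QuizItem` and `grade_item` are hypothetical and are not taken from the Moodle quiz used in this study.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class QuizItem:
    """One checkpoint of the procedure (hypothetical wording)."""
    prompt: str
    # Maps the rating chosen by the trainer to (points, pre-written feedback).
    ratings: dict[str, tuple[int, str]] = field(default_factory=dict)


def grade_item(item: QuizItem, rating: str,
               trainer_comment: Optional[str] = None) -> tuple[int, str]:
    """Return the points awarded and the feedback text shown to the student."""
    points, canned = item.ratings[rating]
    if trainer_comment:
        # The trainer's free-text comment is appended to the pre-written feedback.
        return points, f"{canned} Trainer note: {trainer_comment}"
    return points, canned


# Assumed scoring: one point per checkpoint (17 items, 17 points in total).
item = QuizItem(
    prompt="Pre-oxygenation performed before suction",
    ratings={
        "done": (1, "Checkpoint met."),
        "not done": (0, "Review this checkpoint in the manual."),
    },
)

points, feedback = grade_item(item, "not done",
                              "Oxygenate before disconnecting the tube.")
print(points, feedback)
```

Keeping the pre-written feedback next to each rating is what would let a trainer finish an item with a single selection and type only when something specific needs to be said.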

2. Self-reflection

After completing the formative assessment, students could see the quiz questions and answers as well as the feedback online. The quiz results and feedback provided the students with guidance regarding their competency in the endotracheal suction procedure. They reflected on their performance and the trainer’s written feedback and wrote a self-reflection essay. We used the “assignment” activity within Moodle, on the WBI, for students to submit the essay, in which they had the opportunity to consider their performance and correct their weaknesses.

3. Summative assessment

Three days after completing the formative assessment, the students performed another round of endotracheal suction to demonstrate their learned skillset. As in the formative assessment, students presented their smartphones right before the procedure, and the trainer assessed their performance using the Moodle app. The quiz questions on the summative assessment were the same as those on the formative assessment. The trainer compared students’ performances with those recorded during the formative assessments via quizzes and checked whether their levels of competency had improved.

4. Survey

We used the “survey” activity in Moodle to obtain student feedback about satisfaction and usefulness of the educational system utilized during this study. The survey consisted of one subjective question and 14 objective questions. Of the 14 objective questions, half asked for degree of satisfaction and half about the usefulness of the educational system. Students were asked to specify their level of agreement to a statement on a 5-point Likert scale: 1, strongly disagree; 2, disagree; 3, neither agree nor disagree; 4, agree; 5, strongly agree [14]. The subjective question was intended to collect additional opinions that had not been considered in the objective portion of the survey.
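As an illustration of how responses on this 5-point scale can be summarized, the Python sketch below computes a mean and SD for each survey domain. It rests on assumptions not stated in the text: that items 1 to 7 measure satisfaction and items 8 to 14 measure usefulness, and that each student's domain score is the mean of that student's item scores. The response data are made up for the example, and `domain_summary` is a hypothetical helper.

```python
from statistics import mean, stdev

# Scale labels as given in the survey description.
LIKERT = {
    1: "strongly disagree",
    2: "disagree",
    3: "neither agree nor disagree",
    4: "agree",
    5: "strongly agree",
}


def domain_summary(responses, item_indices):
    """Mean and sample SD of per-student domain scores.

    responses[s][i] is student s's 1-5 rating of item i (0-based).
    """
    per_student = [mean(row[i] for i in item_indices) for row in responses]
    return mean(per_student), stdev(per_student)


# Made-up ratings for three students on the 14 objective items.
responses = [
    [5] * 14,
    [4] * 14,
    [3] * 7 + [5] * 7,
]

# Assumed split: first seven items = satisfaction, last seven = usefulness.
satisfaction = domain_summary(responses, range(0, 7))
usefulness = domain_summary(responses, range(7, 14))
print(satisfaction, usefulness)
```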

5. Statistical analysis

Cronbach’s α was calculated to gauge the internal consistency of the survey questions. A paired-samples t-test was used to analyze the difference in mean scores between the formative assessment and the summative assessment. Statistical analyses were performed using SPSS version 26 (IBM Corp., Armonk, NY, USA). Statistical significance was set at p<0.05.
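For readers unfamiliar with these two statistics, the sketch below shows their standard textbook definitions in plain Python. It is not the SPSS procedure used in the study, the data are made up for illustration, and the function names are hypothetical. Cronbach’s α is computed as k/(k−1) × (1 − Σ item variances / variance of total scores), and the paired t statistic as the mean of the paired differences divided by its standard error.

```python
from math import sqrt
from statistics import mean, stdev, variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """responses[r][i] is respondent r's rating of item i."""
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Return the paired-samples t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Made-up survey ratings: four respondents, three items.
ratings = [[4, 5, 4], [3, 3, 3], [5, 5, 5], [2, 3, 2]]
alpha = cronbach_alpha(ratings)

# Made-up assessment scores for four students (out of 17).
formative = [8, 9, 7, 10]
summative = [14, 15, 12, 16]
t_stat, df = paired_t(formative, summative)
print(alpha, t_stat, df)
```

The p-value is then obtained from the t distribution with `df` degrees of freedom, a step left to a statistics package here.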

Results

1. Difference of students' learning achievements between the formative and summative assessments

We analyzed the difference between the scores of the formative and summative assessments to confirm how much students’ learning had improved. The formative and summative assessment questions were designed to check that the essential requirements had been met for performing a correct endotracheal suction procedure. Students are required to prove that they have enough knowledge and skill to complete the procedure safely and correctly without any adverse effects to the patient, such as hypoxemia and pneumothorax. Many aspects have to be taken into consideration to begin the endotracheal suction procedure: depth of suction, saline instillation, pre-oxygenation, disconnection of a tube, size of suction catheter, suction ion, aseptic procedure, adverse effects, etc. The quiz questions were designed to cover all these concerns related to a safe and successful procedure. From this perspective, it was assumed that the scores of the formative and summative assessments represented the students’ overall proficiency in the procedure.

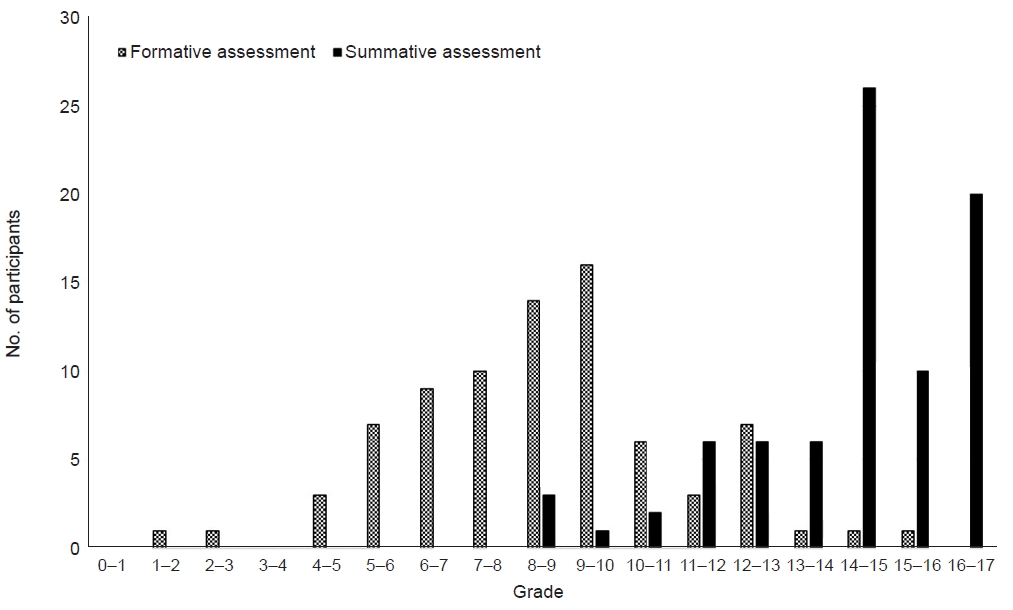

The means (standard deviations, SDs) for the formative and summative assessments were 8.80 (2.53) and 14.24 (1.97) out of a total of 17 points, respectively (Table 1). There was a significant difference in score between the two assessments (p<0.001). Students’ proficiency improved markedly, with the mean score increasing by 61.8%. Only two students (2.5%) scored more than 14 of 17 at the formative assessment; this increased to 56 students (70%) at the summative assessment (Fig. 3).
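The relative change between the two reported means and the proportions of students scoring above 14 points follow directly from the figures in this paragraph. The short Python sketch below recomputes them; the function names are hypothetical and only the reported summary values are used, not the underlying student data.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative increase from `before` to `after`, in percent."""
    return (after - before) / before * 100


def share(count: int, total: int) -> float:
    """Proportion of `count` in `total`, in percent."""
    return 100 * count / total


formative_mean, summative_mean = 8.80, 14.24  # reported means (out of 17)
n_students = 80

increase = round(percent_increase(formative_mean, summative_mean), 1)
high_formative = share(2, n_students)    # students above 14 at formative
high_summative = share(56, n_students)   # students above 14 at summative
print(increase, high_formative, high_summative)
```

Expressed as a ratio, the summative mean is about 1.62 times the formative mean.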

2. Students' satisfaction with the new learning tool

A total of 56 students (70%) responded to the survey on satisfaction with and usefulness of the smartphone app and WBI system (Table 2). The mean (SD) of the degree of satisfaction was 4.60 (0.58), and the mean (SD) for smartphone app and WBI system usefulness was 4.60 (0.65). The internal consistency of the survey for degree of satisfaction and for usefulness was greater than 0.9 (Cronbach’s α=0.918 and 0.919, respectively).

Students were highly satisfied with the newly introduced written feedback and assessment system via a smartphone and WBI website (question no. 3, mean=4.72). The students indicated that the written feedback was helpful (question nos. 4 and 5, mean=4.80 for each). Students were also asked whether the feedback provided during practice was consistent with that provided in a real-world situation. They responded positively to this question, although the degree of satisfaction was lower than for the other questions (question no. 6, mean=4.30).

Regarding the usefulness of the smartphone and WBI system, students felt it was easy to operate (question no. 8, mean=4.58) and that it helped them reflect on their performance and maintain competence (question nos. 9 and 11, mean=4.73 and mean=4.66, respectively). They indicated that the assessments and feedback could potentially be reused for their portfolio in the future (question no. 13, mean=4.41).

Discussion

Effective feedback on medical student performance from formative or summative assessments can be a cornerstone for improving learning outcomes [15-18]. We evaluated a practical and implementable method to provide retrievable and clear feedback to medical students. To accomplish this goal, a virtual learning environment was presented in Moodle [19,20]. Smartphone use in clinical and medical education has been reported to be effective for enhancing patient care and medical education [21-23]. Therefore, we combined these two tools, the Moodle platform (WBI website) and a smartphone, using several Moodle functions that could be implemented in both the WBI website and a smartphone app.

During formative assessment for this study, a trainer input comments as feedback on smartphones provided by students, allowing accurate and efficient feedback. We also embedded online comments that the trainer could use to answer each quiz item, which saved time and facilitated the assessment process [24]. With this approach, the students’ primary responsibility during procedural practice class with the trainer was to concentrate on the procedure based on trainer feedback. We used the quiz as the basis for feedback because many checkpoints in the endotracheal suction procedure can be presented as questions, and various degrees of competency were evaluated through quiz responses. Also, extra feedback can be included as personalized comments. Trainers had the option to provide feedback by selecting pre-provided answers or writing comments.

After completing the formative assessment, students reflected on their activities based on the feedback on the smartphone app and were able to respond by strengthening their weak points [25]. Our goal was for students to correct their mistakes during reflection. They were asked to submit an essay on this reflection, which the trainer assessed for the accuracy of the students’ insights and followed up with additional online feedback.

Table 1 shows that students’ mean procedural skills improved significantly from 8.80 (SD, 2.53) in formative assessment to 14.24 (SD, 1.97) in summative assessment (p<0.001). Translating feedback into action through self-reflection is a process for catalyzing positive behavioral change [21]. Timely and retrievable feedback should increase self-reflection by enabling immediate corrective behavior change.

Use of a smartphone and a WBI system could be perceived as inconvenient by both students and trainers compared to in-person education. However, the system used in this study was described as easy and helpful by students (Table 2). The degree of satisfaction reported by students was excellent (4.30–4.80), with excellent internal consistency (Cronbach’s α >0.9). Trainer activities in this teaching environment are more intensive than in traditional teaching because the trainer is required to record feedback on the smartphone consistently and accurately while observing the students’ performance. The automatically generated feedback helped alleviate the burden of this task.

The COVID-19 pandemic has had a significant effect on life, including society, culture, the economy, and education. It has accelerated online education, which had not been common in medical training [26-28]. It is essential to guarantee quality education while maintaining social distancing during the COVID-19 pandemic [29,30]. To achieve this, student performance could be evaluated and critiqued in a hybrid way via offline practice and online communication. The method introduced herein, harnessing the Moodle online platform and a smartphone for written feedback, can help achieve this two-pronged goal. Digital communication in medical education is gaining importance [31]. Interactive online medical activities can be a rich source of personal reflection and learning and can contribute to a student’s portfolio to be used for future professional development [32,33]. Written feedback and activities using the WBI and smartphone can be included as useful items in the portfolio because they are stored online and are easily extracted.

We only tested the system developed in our institution on one OSCE item, limiting generalizability to the rest of the OSCE items. Further studies with a larger number of items for application of this educational system are necessary. The improvements in proficiency of the endotracheal suction procedure by students could be partly ascribed to well-written feedback or a well-organized learning environment supported by the Moodle platform and use of a smartphone. However, a detailed analysis was not performed to weigh the degree of contribution between them. As stated, the Moodle platform and smartphone app facilitating the written feedback and assessments were the main factors considered in this study. Other factors which could have potentially affected the results were not analyzed. Although the difference between the formative and summative assessments is significant, this cannot fully explain whether the improvement in procedural learning has been obtained by the combination of the written feedback and the Moodle platform or through the repeated assessment and learning.

We believe a learning process using a smartphone and the Moodle platform can offer good guidance for building OSCE skills because online written feedback is retrievable at all times, allowing students to increase or maintain competency via feedback review even after completion of the OSCE class. Because, to our knowledge, the teaching method used in this study has not previously been reported and because teaching and learning environments vary depending on the course subject, further studies that apply this newly designed teaching method are warranted. The educational system evaluated herein could be a good alternative for clinical procedural education during periods when social distancing is necessary, such as during the COVID-19 pandemic.

Notes

Conflicts of interest

Hyunyong Hwang is an editorial board member of the journal but was not involved in the peer reviewer selection, evaluation, or decision process of this article. No other potential conflicts of interest relevant to this article were reported.

Funding

None.

Author contributions

Conceptualization: HL, HH. Data curation: SSL, HL, HH. Formal analysis: HL, HH. Methodology: HL, HH. Visualization: SSL, HL, HH. Writing - original draft: SSL, HL. Writing - review & editing: HH. Approval of final manuscript: all authors.